
Crusoe is excited to announce the launch of new compute virtual machines (VMs) in Crusoe Cloud based on NVIDIA L40S GPUs.

The new l40s-48gb instances, powered by up to 10 NVIDIA L40S GPUs per instance, are designed to provide customers up to 30% better price/performance compared to current-generation GPU platforms for generative AI use cases, including image generation, text summarization and AI chatbots. NVIDIA L40S-powered VMs also provide customers similar price/performance gains for professional graphics use cases, including 3D rendering and virtual production.

NVIDIA L40S GPU for image and text generation

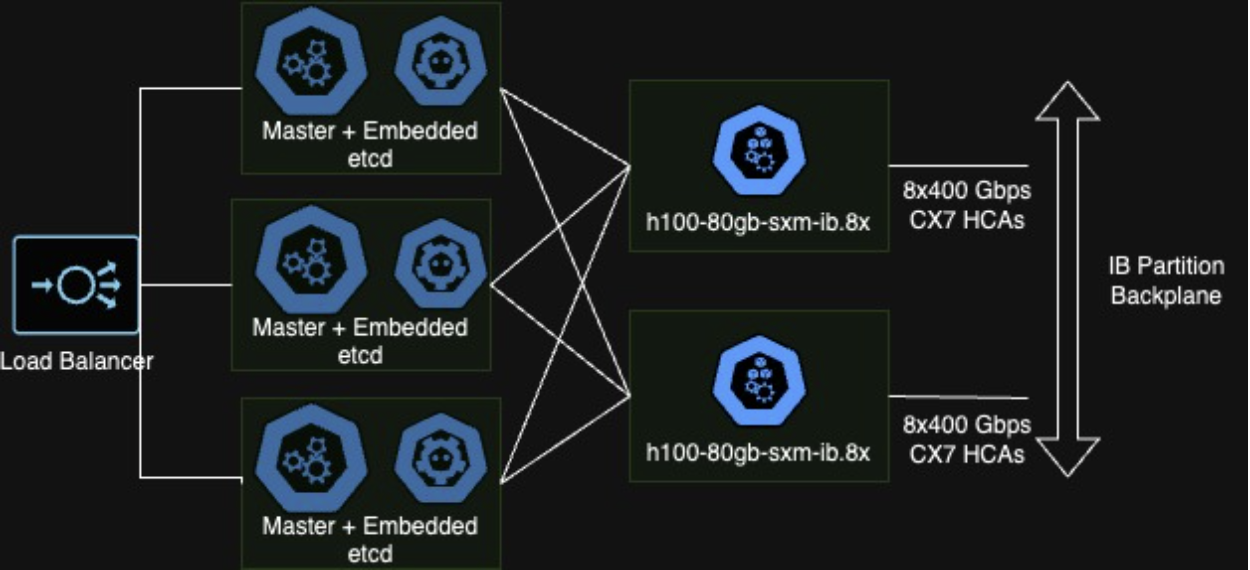

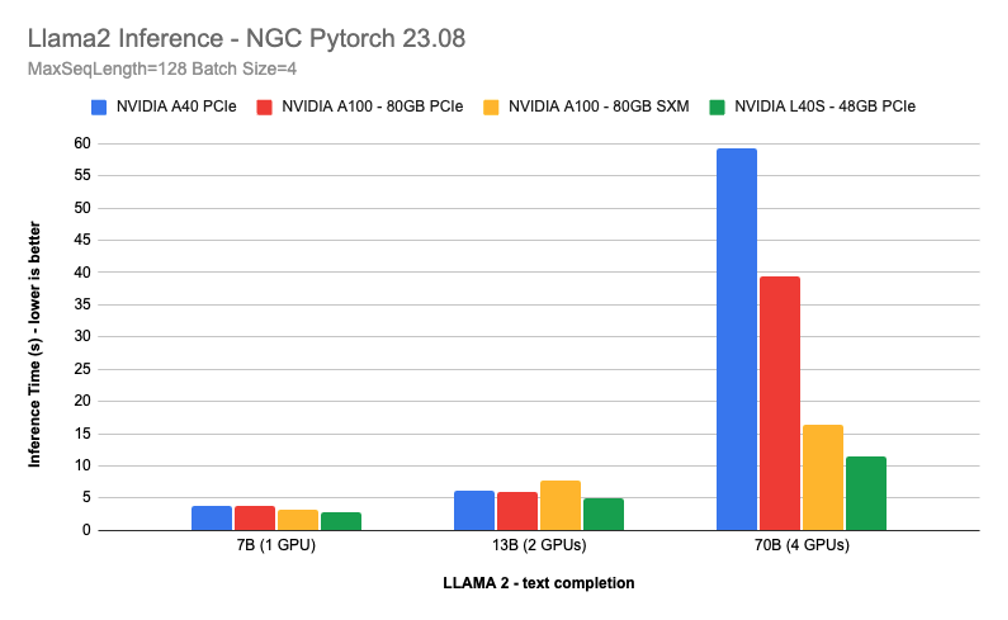

A proven use case for NVIDIA L40S GPUs is generative AI inference using Meta’s Llama2 model. Across varying model sizes, with a sequence length of 128 and a batch size of 4, Crusoe benchmarked inference time on four GPU VM types offered in Crusoe Cloud today: NVIDIA A40, NVIDIA A100 Tensor Core (PCIe and SXM) and NVIDIA L40S GPUs.

NVIDIA L40S-powered VMs outperformed the pack with as much as 30% lower inference time compared to the other GPU types.
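For readers who want to run a comparable measurement on their own VMs, the following is a minimal timing sketch, not the harness Crusoe used. The names `benchmark` and `fake_generate` are hypothetical, and `fake_generate` is a CPU-only stand-in for a real model call with the batch size and sequence length quoted above.

```python
import statistics
import time
from typing import Callable, List


def benchmark(run_once: Callable[[], object], warmup: int = 2, repeats: int = 5) -> dict:
    """Time a zero-argument callable and report summary statistics in seconds."""
    for _ in range(warmup):
        run_once()  # warm-up calls are discarded (caches, lazy initialization)
    samples: List[float] = []
    for _ in range(repeats):
        start = time.perf_counter()
        run_once()
        samples.append(time.perf_counter() - start)
    return {
        "median_s": statistics.median(samples),
        "min_s": min(samples),
        "max_s": max(samples),
    }


def fake_generate(batch_size: int = 4, sequence_length: int = 128) -> int:
    """Hypothetical stand-in for a model call; work scales with the token count."""
    total = 0
    for token in range(batch_size * sequence_length):
        total += token * token
    return total


result = benchmark(fake_generate)
print(f"median {result['median_s'] * 1e3:.3f} ms over 5 timed runs")
```

When timing real GPU work, the callable should also wait for the device to finish before the timer stops, since GPU kernels are typically launched asynchronously.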

An additional proven generative AI use case is image generation with Stable Diffusion, in this case SDXL. Following this tutorial, our team generated images at fp16 at 3.3 seconds per image, running on an l40s-48gb.1x VM powered by a single L40S GPU. Below is an image generated from the classic test prompt, “a photo of an astronaut riding a horse on mars.”

Leveraging 8 bits for fun and cost savings

Since the NVIDIA L40S provides native fp8 and int8 support, Crusoe also benchmarked int8, using support recently added to the NVIDIA TensorRT software development kit. Our team measured 2.8 seconds per image, a roughly 15% speedup over fp16. As you can see, the quality is slightly lower (e.g. the visor is less reflective), but still very good.
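The roughly 15% figure can be checked with a few lines of arithmetic. This sketch assumes both measurements (3.3 for fp16, 2.8 for int8) are time per image, which is the reading under which the smaller int8 number is an improvement. It also assumes the on-demand rate of $1.45 per GPU-hour quoted later in this post. The function names are illustrative only.

```python
FP16_SECONDS_PER_IMAGE = 3.3        # fp16 measurement, assumed to be latency
INT8_SECONDS_PER_IMAGE = 2.8        # int8 measurement, assumed to be latency
ON_DEMAND_USD_PER_GPU_HOUR = 1.45   # on-demand rate quoted in this post


def latency_reduction(baseline_s: float, new_s: float) -> float:
    """Fraction of time saved per image relative to the baseline."""
    return (baseline_s - new_s) / baseline_s


def throughput_gain(baseline_s: float, new_s: float) -> float:
    """Fractional increase in images per second relative to the baseline."""
    return baseline_s / new_s - 1.0


def usd_per_1000_images(seconds_per_image: float, usd_per_gpu_hour: float) -> float:
    """Cost of 1,000 images on one GPU billed at the given hourly rate."""
    return 1000 * seconds_per_image / 3600 * usd_per_gpu_hour


reduction = latency_reduction(FP16_SECONDS_PER_IMAGE, INT8_SECONDS_PER_IMAGE)
gain = throughput_gain(FP16_SECONDS_PER_IMAGE, INT8_SECONDS_PER_IMAGE)
fp16_cost = usd_per_1000_images(FP16_SECONDS_PER_IMAGE, ON_DEMAND_USD_PER_GPU_HOUR)
int8_cost = usd_per_1000_images(INT8_SECONDS_PER_IMAGE, ON_DEMAND_USD_PER_GPU_HOUR)

print(f"time saved per image: {reduction:.1%}")
print(f"throughput gain:      {gain:.1%}")
print(f"cost per 1,000 images: fp16 ${fp16_cost:.2f}, int8 ${int8_cost:.2f}")
```

Under these assumptions the time saved per image is about 15%, which corresponds to a somewhat larger gain when expressed as images per second.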

fp8 and int8 are useful tools in the toolbox for improving cost per inference, especially where models can be quantized losslessly (or near-losslessly). For example, outputs and accuracy for llama2-13b at fp8 and fp16 are nearly identical, so this quantization is practically lossless, while throughput increases significantly!
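To make "near-lossless" concrete, here is a small illustration of symmetric int8 quantization on synthetic values. It is a simplified sketch and not how TensorRT quantizes a model: production toolchains typically choose scales per channel or via calibration data, and fp8 is a floating-point format that works differently. The helper names and the synthetic weights are assumptions for illustration.

```python
import random


def quantize_int8(values):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Map int8 codes back to approximate float values."""
    return [q * scale for q in quantized]


random.seed(0)
# Synthetic "weights": small values centered on zero (illustrative only).
weights = [random.gauss(0.0, 0.02) for _ in range(1000)]

codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(f"scale={scale:.6f}, max round-trip error={max_error:.6f}")
```

The round-trip error of each value is bounded by half the scale, so whether quantization is practically lossless for a given model depends on how sensitive its outputs are to perturbations of that size.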

NVIDIA L40S GPU for serverless providers

Crusoe’s unique 10-GPU, high-memory configuration enables serverless GPU providers that offer multiple models (either multiple open-source models or per-customer fine-tunes) to switch models very quickly by keeping many models in system memory and swapping them into GPU memory as needed. This configuration lowers the cost per inference while increasing efficiency.
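The model-switching pattern described above can be sketched as a least-recently-used cache. This is a toy model under stated assumptions, not a Crusoe or NVIDIA API: `ModelSwapper` is a hypothetical class, the weights are placeholder strings, and the dictionary assignment stands in for a real host-to-device copy.

```python
from collections import OrderedDict


class ModelSwapper:
    """Toy model of host-memory caching with a fixed number of GPU-resident slots."""

    def __init__(self, gpu_slots: int):
        self.gpu_slots = gpu_slots
        self.host_memory = {}        # model name -> weights kept in system RAM
        self.on_gpu = OrderedDict()  # GPU-resident models, least recently used first
        self.swaps = 0               # number of simulated host-to-GPU copies

    def register(self, name, weights):
        """Load a model into system memory once, ahead of any request."""
        self.host_memory[name] = weights

    def acquire(self, name):
        """Return GPU-resident weights, swapping in from host memory if needed."""
        if name in self.on_gpu:
            self.on_gpu.move_to_end(name)    # mark as most recently used
            return self.on_gpu[name]
        if len(self.on_gpu) >= self.gpu_slots:
            self.on_gpu.popitem(last=False)  # evict the LRU model; host copy remains
        self.on_gpu[name] = self.host_memory[name]  # stands in for a device copy
        self.swaps += 1
        return self.on_gpu[name]


swapper = ModelSwapper(gpu_slots=2)
for model_name in ("llama2-13b", "customer-a-finetune", "customer-b-finetune"):
    swapper.register(model_name, weights=f"<weights for {model_name}>")

for request in ("llama2-13b", "customer-a-finetune", "llama2-13b", "customer-b-finetune"):
    swapper.acquire(request)

print(list(swapper.on_gpu), swapper.swaps)
```

Because evicted models stay in system memory, a later request for them pays only for the copy back to the GPU rather than a reload from disk or network storage, which is where a large system-memory pool helps.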

If you are a serverless GPU provider, reach out to [email protected] to test out NVIDIA L40S GPUs on your platform!

NVIDIA L40S GPUs: Not Just for Inference

Lastly, while we see the best performance gains in generative AI and inference workloads, NVIDIA L40S GPUs aren’t just for inference, as demonstrated by Eric Hartford’s latest fine-tune, dolphin-2.8-mistral-7b-v02. Eric fine-tuned Mistral 7b with a 32k context window in under three days, running on an l40s-48gb.10x instance powered by 10 L40S GPUs, at a cost of just under $1000. Such instances offer an ideal blend of price and performance for fine-tunes like these.
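As a rough sanity check on the fine-tuning cost, the sketch below multiplies GPU count, hours and hourly rate. It assumes the on-demand rate of $1.45 per GPU-hour quoted in this post; the rate actually applied to this run is not stated, so the output is an estimate rather than a record of what was billed.

```python
GPUS = 10
USD_PER_GPU_HOUR = 1.45  # assumption: on-demand rate quoted in this post


def run_cost_usd(gpus: int, hours: float, usd_per_gpu_hour: float) -> float:
    """Total cost of running `gpus` GPUs for `hours` at a flat hourly rate."""
    return gpus * hours * usd_per_gpu_hour


def hours_for_budget(budget_usd: float, gpus: int, usd_per_gpu_hour: float) -> float:
    """Wall-clock hours that a budget buys on `gpus` GPUs."""
    return budget_usd / (gpus * usd_per_gpu_hour)


three_day_cost = run_cost_usd(GPUS, 72, USD_PER_GPU_HOUR)
hours_at_1000 = hours_for_budget(1000, GPUS, USD_PER_GPU_HOUR)

print(f"10 GPUs for a full 72 hours: ${three_day_cost:,.2f}")
print(f"$1,000 buys about {hours_at_1000:.1f} hours on 10 GPUs")
```

At that assumed rate, a full three days on 10 GPUs costs slightly more than $1000, and $1000 covers a little under 69 hours, which is consistent with a run that finished in under three days.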

If you’re looking for compute sponsorship for a fine tune, especially of a model like Grok or DBRX, please reach out to [email protected].

Get started with L40S GPUs on Crusoe Cloud

Customers can launch NVIDIA L40S-powered VMs in Crusoe Cloud with up to 10x NVIDIA L40S 48GB GPUs, 80 vCPUs (AMD Genoa), 1470 GB system memory and 200 Gbps networking throughput. NVIDIA L40S-powered VMs are offered starting at $1.45 / GPU-hr for on-demand usage, with discounts available for committed usage. Full specifications are available in our documentation, and pricing is available at crusoe.ai/cloud/pricing. To get started using L40S GPUs, please request access to Crusoe Cloud.

For any questions, or to learn more about Crusoe Cloud, please visit our website or contact sales.